During my final year in Cambridge I had the opportunity to work on a project that I had wanted to implement for the previous three years: a glasses-free 3D display.

1. Introduction

It all started when I saw Johnny Lee's "Head Tracking for Desktop VR Displays using the Wii Remote" project in early 2008 (see below). He cunningly used the infrared camera in the Nintendo Wii's remote and a head-mounted sensor bar to track the location of the viewer's head and render view-dependent images on the screen. He called it a "portal to the virtual environment".
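The "view-dependent images" are the heart of the trick: each frame is rendered with an asymmetric (off-axis) perspective frustum whose apex is the tracked head and whose base is the physical screen. Below is a minimal Python sketch of that geometry, assuming the screen is centred at the origin in the z = 0 plane, the head position is given in metres in the same frame, and the matrix follows OpenGL's glFrustum convention. It is an illustration of the general technique, not code from either project.

```python
from dataclasses import dataclass


@dataclass
class Frustum:
    left: float
    right: float
    bottom: float
    top: float
    near: float
    far: float


def off_axis_frustum(eye, screen_w, screen_h, near, far):
    """Asymmetric frustum for a viewer whose eye is at `eye` = (x, y, z) metres.

    Assumes the screen is centred at the origin in the z = 0 plane and the
    viewer is in front of it (z > 0), looking towards -z.
    """
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("the eye must be in front of the screen (z > 0)")
    # Similar triangles: project the screen edges, as seen from the eye,
    # onto the near clipping plane.
    scale = near / ez
    return Frustum(
        left=(-screen_w / 2 - ex) * scale,
        right=(screen_w / 2 - ex) * scale,
        bottom=(-screen_h / 2 - ey) * scale,
        top=(screen_h / 2 - ey) * scale,
        near=near,
        far=far,
    )


def frustum_matrix(fr):
    """Row-major 4x4 projection matrix equivalent to OpenGL's glFrustum."""
    l, r, b, t, n, f = fr.left, fr.right, fr.bottom, fr.top, fr.near, fr.far
    return [
        [2 * n / (r - l), 0.0, (r + l) / (r - l), 0.0],
        [0.0, 2 * n / (t - b), (t + b) / (t - b), 0.0],
        [0.0, 0.0, -(f + n) / (f - n), -2 * f * n / (f - n)],
        [0.0, 0.0, -1.0, 0.0],
    ]


# Head 10 cm right of centre, 60 cm in front of a 40 cm x 30 cm screen.
fr = off_axis_frustum((0.1, 0.0, 0.6), 0.4, 0.3, near=0.1, far=100.0)
projection = frustum_matrix(fr)
```

To render, this projection would be combined with a view matrix that translates the scene by (-x, -y, -z), so that the head becomes the camera origin. With the head 10 cm right of centre the frustum is skewed (left = -0.05, right of about 0.017 on the 0.1 m near plane), which is what makes the scene appear to sit behind the glass rather than on it.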

Johnny Lee's project "Head Tracking for Desktop VR Displays using the Wii Remote".

I always thought that it would be really cool to have this behaviour without having to wear anything on your head (and it was - see the video below!).

My "portal to the virtual environment" which does not require head gear. And it has 3D Tetris!

I am a firm believer in three-dimensional displays, and I am certain that we do not see widespread adoption of 3D displays simply because of a classic network effect (also known as the "chicken-and-egg" problem). Creating and distributing three-dimensional content is inevitably much more expensive than creating and distributing regular, old-school 2D content. If there is no demand (i.e. no one has a 3D display at home or at work), content providers have little incentive to bother creating 3D content. Conversely, if there is no content, consumers have little incentive to invest in (inevitably more expensive) 3D displays.

A "portal to the virtual environment", or as I like to call it, a 2.5D display, could effectively solve this. If we could enhance every 2D display (and I mean every one: LCD, CRT, you-name-it) to get what you see in Johnny's and my videos, then suddenly everyone could consume 3D content even without a "fully" 3D display. At that point it starts making sense to create 3D content on a mass scale.

The terms "fully" and 2.5D, however, require a bit of explanation.

1.1. Human depth perception

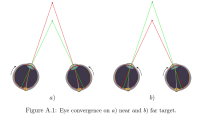

You see, human depth perception comes from a variety of sensory cues. Some of them come from our ability to sense the position of our eyes and the tension in our eye muscles ("oculomotor" cues). For example, when the object we are focusing on moves closer, we can feel our eyes turning inwards (i.e. we sense the tension in the extraocular muscles). This is the so-called "convergence" depth cue.
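To get a feel for how strong the convergence cue is, one can compute the vergence angle (the angle between the two lines of sight) for a point straight ahead at distance d: it is 2·atan(IPD / 2d). The sketch below assumes an interpupillary distance (IPD) of about 6 cm and a target on the midline between the eyes.

```python
import math


def vergence_angle_deg(distance_m, ipd_m=0.06):
    """Angle (degrees) between the two lines of sight when both eyes fixate a
    point `distance_m` metres straight ahead; `ipd_m` is the eye separation."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))


for d in (0.25, 0.5, 1.0, 2.0, 10.0):
    print(f"{d:5.2f} m -> {vergence_angle_deg(d):5.2f} deg")
```

The angle roughly halves every time the distance doubles (about 6.9 degrees at 0.5 m, but only about 0.34 degrees at 10 m), so beyond a couple of metres the change in eye posture becomes very small.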

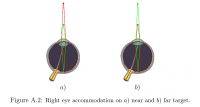

Another kinesthetic sensation arises from the change in the shape of the eye's lens that occurs when we focus on objects at different distances (called "accommodation"). In this case, the ciliary muscles change the curvature of the lens (it becomes thicker for near objects and thinner for distant ones), changing the eye's focal length. These kinesthetic sensations (processed in the visual cortex) serve as basic cues for distance interpretation.
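Accommodation is usually measured in diopters, the reciprocal of the focusing distance in metres. A minimal sketch, assuming an idealised eye with no refractive error, shows both how quickly the demand falls off with distance and why a flat display cannot drive this cue: the eyes have to stay focused on the screen plane whatever depth is being rendered.

```python
def accommodation_demand_d(distance_m):
    """Optical power (diopters) the lens must add, relative to its relaxed
    far-focus state, to focus at `distance_m` metres. Assumes an idealised
    eye with no refractive error."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m


for d in (0.25, 0.5, 2.0, 10.0):
    print(f"{d:5.2f} m -> {accommodation_demand_d(d):4.2f} D")

# On a flat screen 60 cm away the demand stays at 1/0.6 (about 1.67 D), no
# matter how near or far the rendered object is supposed to be.
screen_demand = accommodation_demand_d(0.6)
```

Going from 25 cm to 50 cm changes the demand by 2 D, while going from 2 m to 10 m changes it by only 0.4 D.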

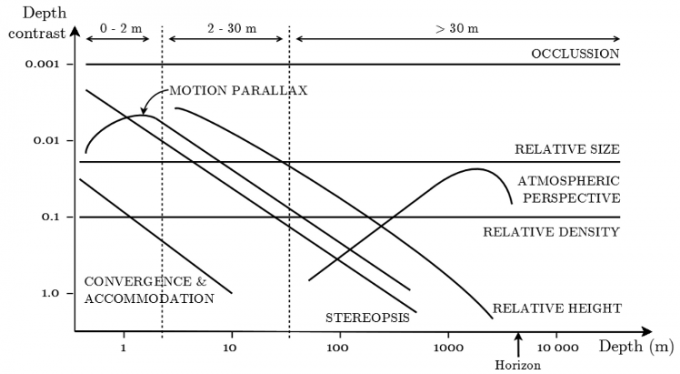

Johnny's and my displays cannot simulate these oculomotor cues. In fact, the increased depth perception seen in the videos above comes from a monocular (read: single-eye) motion cue called "motion parallax". Fancy name aside, motion parallax simply means that, for a moving observer, objects closer than the point being looked at seem to move faster (and in the direction opposite to the observer's movement), whereas objects farther away move more slowly (and in the same direction). However, motion parallax alone is not enough to create a full 3D impression.
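The direction rule can be made concrete with a small-angle approximation. Suppose the observer moves sideways at speed v while keeping their gaze on a point at distance f; an object near the line of sight at distance d then drifts across the visual field at roughly v*(1/f - 1/d) radians per second. This is a textbook geometric sketch, not something taken from the thesis; positive values mean "same direction as the observer".

```python
def parallax_velocity(observer_speed, fixation_dist, object_dist):
    """Approximate angular velocity (rad/s) of an object in the visual field
    while the observer translates sideways and keeps fixating one point.

    Small-angle sketch: positive = drifts the same way the observer moves,
    negative = drifts the opposite way. Distances in metres, speed in m/s.
    """
    if fixation_dist <= 0 or object_dist <= 0:
        raise ValueError("distances must be positive")
    return observer_speed * (1.0 / fixation_dist - 1.0 / object_dist)


# Head moving at 10 cm/s while looking at a point 1 m away.
for d in (0.25, 0.5, 1.0, 2.0, 10.0):
    print(f"object at {d:5.2f} m: {parallax_velocity(0.1, 1.0, d):+.3f} rad/s")
```

Near objects sweep quickly the opposite way, while far objects drift slowly with the observer and can never exceed v/f. A head-tracked renderer reproduces this behaviour automatically, because every frame is drawn from the viewer's current head position.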

In the average adult human, the eyes are horizontally separated by about 6 cm, so even when both eyes look at the same scene, the images formed on the two retinae differ. This difference between the left and right images (called "binocular disparity") is translated into depth perception in the brain (in the striate cortex and higher up in the visual system), creating the "stereopsis" depth cue.
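For two points straight ahead, the relative disparity is approximately IPD*(1/d_near - 1/d_far) radians. The sketch below uses that small-angle approximation with an assumed 6 cm eye separation to show why this cue matters mostly up close: the same 10 cm depth step produces a far larger disparity at half a metre than at five metres.

```python
import math


def relative_disparity_arcmin(near_m, far_m, ipd_m=0.06):
    """Small-angle relative binocular disparity (arc minutes) between two
    points on the midline at `near_m` and `far_m` metres."""
    if near_m <= 0 or far_m <= 0:
        raise ValueError("distances must be positive")
    return math.degrees(ipd_m * (1.0 / near_m - 1.0 / far_m)) * 60.0


close = relative_disparity_arcmin(0.5, 0.6)  # 10 cm depth step at arm's length
far = relative_disparity_arcmin(5.0, 5.1)    # the same step across a room
print(f"{close:.1f} arcmin vs {far:.2f} arcmin")
```

That is roughly 69 versus 0.8 arc minutes, a factor of about 85.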

As the figure below (from Cutting and Vishton, 1995) shows, stereopsis and motion parallax are among the most important depth cues at close range (< 2 meters).

The fact that we cannot simulate the stereopsis depth cue on a standard LCD, CRT, etc. display is one of the main reasons why I call such displays 2.5-dimensional (nevertheless, they are still really exciting!).

So, how did I create my 2.5D display?

2. 2.5D display implementation

Well, initially I thought of using just a standard webcam and inferring the viewer's distance from the camera with a whole bunch of cunning calibration and computer vision techniques. However, my supervisor-to-be, Neil Dodgson (as it turns out, one of the chairs of the international Stereoscopic Displays & Applications conference in 2006, 2007, 2010 and 2011, and a pretty cool dude in general), suggested using Microsoft Kinect.
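For the record, the simplest version of the webcam idea is the pinhole-camera relation distance = focal_length_px * real_width / width_px applied to a detected face. The sketch below is a hypothetical illustration, not what was actually considered in the project: it assumes a calibrated focal length in pixels and a made-up average face width of 15 cm. Its weak spots (faces differ in width, and a turned head looks narrower) hint at why a camera that measures depth directly is attractive.

```python
def distance_from_face_width(face_width_px, focal_length_px, real_face_width_m=0.15):
    """Pinhole-camera estimate of the viewer's distance (metres).

    Hypothetical assumptions: `focal_length_px` comes from a prior camera
    calibration, and every viewer's face is `real_face_width_m` wide.
    """
    if face_width_px <= 0:
        raise ValueError("face width must be positive")
    # Similar triangles: width_px / focal_px == real_width / distance.
    return focal_length_px * real_face_width_m / face_width_px


# A 150-pixel-wide face seen by a camera with a 600-pixel focal length.
estimate = distance_from_face_width(150, 600)
```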

Kinect's projected IR dot pattern.

The neat piece of hardware that actually makes Kinect exciting is its IR depth-sensing camera. For (almost) every pixel in the video stream, Kinect can determine that pixel's distance from the camera, essentially by looking at the distortions of the projected IR dot pattern. Combined with some clever machine learning, this enables Kinect to track the positions of twenty major joints of the user's body in real time (called skeletal tracking).
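The dot-pattern trick is a form of triangulation. Kinect's exact processing is not public, but a common simplification treats the IR projector as a second camera, so that a dot shifted by d pixels lies at depth z = f*b/d, where f is the IR camera's focal length in pixels and b the projector-camera baseline. The default values in the sketch below are illustrative assumptions, roughly in the range often quoted for the first-generation Kinect, not official specifications.

```python
def depth_from_disparity(disparity_px, focal_length_px=580.0, baseline_m=0.075):
    """Triangulated depth (metres) of a projected dot observed `disparity_px`
    pixels from where it would appear if it were infinitely far away.

    Treats the projector and IR camera as a rectified stereo pair; the default
    focal length and baseline are illustrative assumptions, not Kinect specs.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point at finite depth")
    return focal_length_px * baseline_m / disparity_px


def depth_step(depth_m, focal_length_px=580.0, baseline_m=0.075):
    """Depth change caused by a one-pixel disparity change at `depth_m`:
    dz ~= z^2 / (f * b), i.e. resolution degrades with the square of depth."""
    return depth_m ** 2 / (focal_length_px * baseline_m)
```

Under these assumptions a one-pixel disparity step corresponds to roughly 2 cm of depth at 1 m but about 9 cm at 2 m, which is one reason why triangulated depth gets coarser as the viewer moves away.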

However, for skeletal tracking Kinect requires the whole person to be visible in the sensor's field of view - not a very realistic requirement, especially in desktop PC environments.

The final idea was simple:

- Use the Viola-Jones face detector to detect the viewer's face in the colour (RGB) image.

- Use the enhanced CAMShift face tracker to track the face until it is first lost, and then use the V-J face detector again to re-detect it.

- Use the ViBe background subtractor to get rid of the nearly static background to help with the tracking.

- In parallel, to exploit the depth data coming from Kinect, use the Garstka and Peters depth-based head detector and a modified CAMShift tracker to track the head.

- Merge the colour- and depth-based tracker predictions, filter the noise using some impulse/high-pass filters and... Bob's your uncle!
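The enhanced CAMShift tracker is not described in detail here, so for orientation below is a plain textbook CAMShift in pure Python (OpenCV ships one as cv2.CamShift). It works on any per-pixel probability map, such as a colour-histogram back-projection or a depth-based head likelihood. Returning None when the window holds no probability mass is one simple way to signal the "first loss" after which the Viola-Jones detector would be rerun; the square output window and the 2*sqrt(M00) size rule (for probabilities in [0, 1]) are simplifying assumptions.

```python
import math


def camshift(prob, window, max_iter=10, eps=1.0):
    """Minimal CAMShift: mean-shift the window onto the probability mass,
    then resize it from the mass it contains.

    prob   -- list of rows of per-pixel probabilities in [0, 1]
    window -- (x, y, w, h) search window, e.g. from the previous frame
    Returns the new (x, y, w, h) window, or None if the target is lost.
    """
    if max_iter < 1:
        raise ValueError("max_iter must be at least 1")
    rows, cols = len(prob), len(prob[0])
    x, y, w, h = window
    for _ in range(max_iter):
        # Zeroth (m00) and first (m10, m01) moments inside the window.
        m00 = m10 = m01 = 0.0
        for r in range(max(0, y), min(rows, y + h)):
            for c in range(max(0, x), min(cols, x + w)):
                p = prob[r][c]
                m00 += p
                m10 += c * p
                m01 += r * p
        if m00 == 0.0:
            return None  # nothing left to track: fall back to the detector
        cx, cy = m10 / m00, m01 / m00
        # Mean-shift step: centre the window on the centroid.
        new_x, new_y = round(cx - w / 2), round(cy - h / 2)
        converged = math.hypot(new_x - x, new_y - y) < eps
        x, y = new_x, new_y
        if converged:
            break
    # Continuously adaptive step: the side length grows with the enclosed mass.
    side = max(1, round(2 * math.sqrt(m00)))
    return round(cx - side / 2), round(cy - side / 2), side, side


# A 4x4 "head" blob in an otherwise empty 20x20 probability map.
prob = [[0.0] * 20 for _ in range(20)]
for r in range(10, 14):
    for c in range(12, 16):
        prob[r][c] = 1.0
tracked = camshift(prob, (8, 6, 8, 8))
lost = camshift([[0.0] * 20 for _ in range(20)], (8, 6, 8, 8))
```

In a combined pipeline, a None result would hand control back to the detector, while a valid window seeds the search in the next frame.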

(Seriously, though, if you are interested in the actual technology behind it, drop me an e-mail at manfredas@zabarauskas.com and I might be able to provide you with my actual 167-page thesis, containing all the nitty-gritty details.)

Because I had decided to write everything from scratch, I also had to implement a distributed training framework for Viola-Jones using AsymBoost (in the image below you can see the Cambridge Computer Lab machines ploughing through more than 32 million non-face images in order to "learn" the differences between a human face and, say, a chair).
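At the core of such training is the boosting round from the original Viola-Jones paper: normalise the example weights, pick the decision stump with the lowest weighted error, then shrink the weights of correctly classified examples by beta = err / (1 - err). The sketch below shows that plain (symmetric) round over hypothetical precomputed feature values; AsymBoost additionally tilts the weighting towards the face examples so that missed faces cost more than false alarms, and that refinement is omitted here.

```python
import math


def boosting_round(features, labels, weights):
    """One Viola-Jones-style AdaBoost round with decision stumps.

    features -- features[j][i]: value of (hypothetical) feature j on example i
    labels   -- 1 for faces, 0 for non-faces
    weights  -- current example weights (normalised here, as in the paper)
    Returns (stump, alpha, new_weights); stump = (j, threshold, polarity)
    labels an example as a face iff polarity * f < polarity * threshold.
    """
    total = sum(weights)
    weights = [w / total for w in weights]
    best = None
    # Brute-force search over every feature, threshold and polarity.
    for j, column in enumerate(features):
        for threshold in sorted(set(column)):
            for polarity in (1, -1):
                err = sum(
                    w
                    for f, y, w in zip(column, labels, weights)
                    if int(polarity * f < polarity * threshold) != y
                )
                if best is None or err < best[0]:
                    best = (err, j, threshold, polarity)
    err, j, threshold, polarity = best
    err = min(max(err, 1e-10), 1 - 1e-10)  # keep beta and log(1/beta) finite
    beta = err / (1 - err)
    # Correct examples are down-weighted; the next round renormalises.
    new_weights = [
        w * beta if int(polarity * f < polarity * threshold) == y else w
        for f, y, w in zip(features[j], labels, weights)
    ]
    return (j, threshold, polarity), math.log(1 / beta), new_weights


# Toy data: one feature whose value is low for faces (label 1).
stump, alpha, new_w = boosting_round([[1, 2, 3, 4]], [1, 1, 0, 0], [0.25] * 4)
```

The search is independent for each feature, which makes the feature loop a natural unit to split across many machines; a practical implementation would also sort each feature's values once and sweep thresholds in linear time instead of rescanning.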

After the training, there were 42 misclassifications out of 32.9 million non-face images in total (three examples of non-face images misclassified as faces are shown on the left).

Besides this, a whole bunch of evaluation software had to be implemented: tools for recording and replaying Kinect depth and video streams, tools to help with the ground-truth tagging of depth and colour evaluation videos, Viola-Jones framework evaluators, and so on.
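The 42 misclassifications out of 32.9 million non-face images correspond to a false-positive rate of roughly 1.3 in a million. In a Viola-Jones cascade, a window must pass every stage to be reported as a face, so the overall false-positive rate is the product of the per-stage rates (each measured on the windows that reach that stage). The snippet below works through that arithmetic; the 50% per-stage rate is a hypothetical figure for illustration, not one from the project.

```python
observed_fpr = 42 / 32.9e6  # misclassified non-faces / non-faces tested


def stages_needed(target_fpr, per_stage_fpr):
    """Fewest identical cascade stages whose combined false-positive rate
    (the product of the per-stage rates) is at or below `target_fpr`."""
    if not (0 < per_stage_fpr < 1) or target_fpr <= 0:
        raise ValueError("need 0 < per_stage_fpr < 1 and target_fpr > 0")
    stages, combined = 0, 1.0
    while combined > target_fpr:
        combined *= per_stage_fpr
        stages += 1
    return stages


print(f"observed false-positive rate: {observed_fpr:.2e}")
print("stages needed at a hypothetical 50% per stage:", stages_needed(observed_fpr, 0.5))
```

At a hypothetical 50% per stage, 20 stages would be needed, since 0.5^19 is still about 1.9e-6 while 0.5^20 is about 9.5e-7.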

3. Final outcome

So, what was the result?

Well, during 10 minutes of evaluation recordings (containing unconstrained head movement in six degrees of freedom, in the presence of occlusions, changing facial expressions, different backgrounds and varying lighting conditions), the combined head tracker predicted the viewer's head center to within less than a third of the head's size from the actual head center, on average!
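An error measured relative to head size can be computed along the following lines. The exact definition used in the thesis isn't given here, so this sketch assumes that every frame has a predicted head centre, a hand-tagged ground-truth centre and a tagged head size (say, the head's width in pixels), and it averages the per-frame distance divided by that size. Under that reading, the reported result means this average came out below 1/3.

```python
import math


def mean_normalised_error(predicted, ground_truth, head_sizes):
    """Mean distance between predicted and tagged head centres, as a fraction
    of the tagged head size in each frame. Centres are (x, y) pixel pairs."""
    if not predicted or not (len(predicted) == len(ground_truth) == len(head_sizes)):
        raise ValueError("all sequences need the same, non-zero number of frames")
    total = 0.0
    for (px, py), (gx, gy), size in zip(predicted, ground_truth, head_sizes):
        total += math.hypot(px - gx, py - gy) / size
    return total / len(predicted)


# Two frames: one prediction 10 px off on a 30 px head, one exactly right.
err = mean_normalised_error([(110, 50), (200, 80)], [(100, 50), (200, 80)], [30, 30])
```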

It was running at 28.24 FPS (limited only by Kinect's frame rate of 30 FPS) using 56.8% of a single core of an Intel i5-2410M CPU @ 2.30 GHz (with hyperthreading enabled).

The project was highly commended by the University of Cambridge Faculty of Computer Science and Technology (a fancy name for the Computer Lab) and by the international Undergraduate Awards 2012 (an achievement which received a couple of mentions on my college's and the Computer Lab's websites).

All in all, I managed to accomplish something that I had wanted to do for a long time. Eventually, I might publish the code and the details of the technology, but there is still work to be done, so don't hold your breath.

I firmly believe that under the right circumstances the capabilities of devices like Kinect could be world-changing. And to be honest, there is a good chance that I might play a small part in that effort in the near future. But that is a story for another blog post.